Kudos ROS Platform

Project Description

In the Spring semester of 2017, I convinced most of the active members of Computer Science House (CSH) to buy a differential drive robot that one of our members had built in his free time. I set out to get it to map the floor using hector SLAM. On top of that, I am trying to implement a way for the robot to wander around a partially "known" map. The goal is for this wandering to explore the fringes of the map so that the map keeps expanding. The current candidate is the hector navigation stack with its exploration node.
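Exploring "the fringes of the map" is usually called frontier-based exploration. A frontier is a known-free cell that borders unknown space, and driving toward one lets the robot observe, and therefore map, what lies beyond it. The plain-Python sketch below finds frontier cells in a grid that follows the nav_msgs/OccupancyGrid value convention (-1 unknown, 0 to 100 occupancy). It illustrates the general idea only and is not the hector exploration node's implementation. The real message stores cells in a flat row-major array rather than the 2D list used here, and the FREE_THRESHOLD cutoff is a hypothetical choice.

```python
from typing import List, Tuple

UNKNOWN = -1         # nav_msgs/OccupancyGrid value for unexplored cells
FREE_THRESHOLD = 50  # hypothetical: occupancy values in [0, 50) count as free


def is_free(value: int) -> bool:
    return 0 <= value < FREE_THRESHOLD


def find_frontier_cells(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell that touches at least one unknown cell."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if not is_free(grid[r][c]):
                continue
            # Look at the 4-connected neighbours for unexplored space.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


# Tiny example map: 0 = free, 100 = wall, -1 = unknown.
example = [
    [0, 0, -1],
    [0, 100, -1],
    [0, 0, 0],
]
print(find_frontier_cells(example))  # [(0, 1), (2, 2)]
```

A full planner would then group adjacent frontier cells and choose a reachable one as the next navigation goal.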

First Approach To The Problem

Before the robot was bought by Computer Science House, it could only be driven by remote control with a wireless Xbox controller. After the purchase, I outfitted the robot with a Neato XV11 Lidar, a webcam, and a Raspberry Pi 3. I set up hector SLAM on the Raspberry Pi, feeding it LaserScan data from the XV11 Lidar, and configured the ROS visualization tool Rviz to receive the map topic. I then drove the robot around the floor to generate a map and localize within it. However, the map updated with severe latency because the Raspberry Pi could not run SLAM fast enough to keep up. A new approach was required.
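hector SLAM consumes sensor_msgs/LaserScan messages, which store each sweep in polar form: reading i lies at angle angle_min + i * angle_increment, and readings outside [range_min, range_max] are invalid. The plain-Python sketch below (no ROS dependency) converts a sweep into Cartesian points and estimates the gap between neighbouring returns. The 1° step in the example is an assumption about the XV11's resolution (360 readings per revolution).

```python
import math
from typing import List, Tuple


def scan_to_points(ranges: List[float], angle_min: float, angle_increment: float,
                   range_min: float, range_max: float) -> List[Tuple[float, float]]:
    """Convert LaserScan-style polar readings into (x, y) points in the laser frame."""
    points = []
    for i, r in enumerate(ranges):
        # Drop invalid returns; the comparison is also False for nan.
        if not (range_min <= r <= range_max):
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def point_spacing(distance: float, angle_increment: float) -> float:
    """Approximate arc length between neighbouring returns at a given distance."""
    return distance * angle_increment


# Assumed XV11-like resolution: 360 readings per revolution, i.e. 1 degree apart.
step = math.radians(1.0)
print(round(point_spacing(3.0, step), 3))  # 0.052 m between returns at 3 m
```

Gaps of around 5 cm at 3 m mean that small wall features are easily missed, which matters for scan matching in plain hallways.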

Fixing the Latency Issue

The solution involved the distributed computing features of ROS. CSH has a Proxmox virtual machine cluster that members can use. I instantiated an Ubuntu VM in our server room and installed the ROS Kinetic packages on it. I then changed the architecture so that the robot publishes LaserScan topics, which are received by hector SLAM running on the VM. The VM generates the map, computes the pose of the robot, and republishes the map and pose topics over the network. My laptop, running Rviz, subscribes to these topics and visualizes them.
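In ROS 1, a multi-machine setup like this works because every node registers with a single master. Each machine sets ROS_MASTER_URI to the master's address (roscore listens on port 11311 by default) and ROS_IP to its own reachable address so that peers can connect to its topics. The sketch below renders those variables for each machine. Which machine runs roscore is not stated above; the example assumes the VM, and the 192.0.2.x addresses are placeholders.

```python
from typing import Dict

ROS_MASTER_PORT = 11311  # default roscore port


def ros_network_env(own_ip: str, master_ip: str) -> Dict[str, str]:
    """Environment variables one machine needs to join a shared ROS 1 graph."""
    return {
        # Every machine must point at the same master...
        "ROS_MASTER_URI": f"http://{master_ip}:{ROS_MASTER_PORT}",
        # ...and advertise an address the other machines can reach it on.
        "ROS_IP": own_ip,
    }


def export_lines(env: Dict[str, str]) -> str:
    """Render the variables as shell export lines, e.g. for ~/.bashrc."""
    return "\n".join(f"export {key}={value}" for key, value in env.items())


# Placeholder addresses; the master is assumed to run on the VM.
VM_IP = "192.0.2.10"
hosts = {"vm": VM_IP, "raspberry-pi": "192.0.2.20", "laptop": "192.0.2.30"}
for name, ip in hosts.items():
    print(f"# {name}")
    print(export_lines(ros_network_env(ip, VM_IP)))
```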

This setup fixed the map topic latency issue. The next step was to set up a way to toggle between Xbox control of the robot and autonomous navigation of the floor.
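One simple way to toggle between the Xbox controller and autonomous navigation is a small multiplexer that forwards one of two velocity commands to the motors, with a controller button flipping the mode. The plain-Python sketch below assumes a dedicated toggle button, and Twist2D is a hypothetical stand-in for the two geometry_msgs/Twist fields a differential drive uses. The existing twist_mux ROS package is a priority-based alternative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Twist2D:
    """Hypothetical stand-in for geometry_msgs/Twist: only linear.x and angular.z."""
    linear_x: float = 0.0
    angular_z: float = 0.0


class CommandMux:
    """Choose which velocity command reaches the motors."""

    def __init__(self) -> None:
        self.autonomous = False
        self._last_button = False

    def update_button(self, pressed: bool) -> None:
        # Toggle only on the rising edge, so holding the button does not flicker.
        if pressed and not self._last_button:
            self.autonomous = not self.autonomous
        self._last_button = pressed

    def select(self, teleop: Optional[Twist2D], nav: Optional[Twist2D]) -> Twist2D:
        chosen = nav if self.autonomous else teleop
        # No command from the active source: stop rather than coast.
        return chosen if chosen is not None else Twist2D()


mux = CommandMux()
joystick = Twist2D(linear_x=0.2)
planner = Twist2D(linear_x=0.3, angular_z=0.1)
mux.update_button(True)  # operator presses the toggle
print(mux.select(joystick, planner))  # Twist2D(linear_x=0.3, angular_z=0.1)
```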

A Sign Of Success

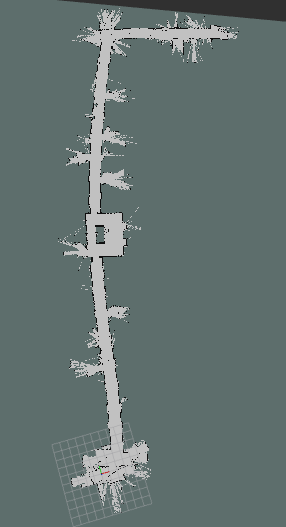

I was able to drive Kudos through the halls of CSH with the mapping node running on the virtual machine and watch a map of the floor come into being in my laptop's instance of Rviz. This was a very rewarding point in the project, since it meant I was on track to solve one of its most difficult components. The screenshot above shows the map as displayed in Rviz.

With the mapping node working properly, I next needed to set up the full navigation stack so that Kudos could drive itself through the current state of the global map. The biggest obstacle would be writing the motor controller node, which converts cmd_vel messages into the PWM signals needed to move each wheel appropriately.
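cmd_vel carries a geometry_msgs/Twist, and for a differential drive only linear.x (forward speed) and angular.z (turn rate) matter. Converting it into motor commands is standard differential-drive inverse kinematics followed by scaling to a PWM range. This is a minimal sketch that assumes hypothetical values for wheel separation, top wheel speed, and an 8-bit PWM output, since Kudos's real values are not given here.

```python
from typing import Tuple

# Hypothetical robot parameters; measure the real robot before use.
WHEEL_SEPARATION_M = 0.40   # distance between the two drive wheels
MAX_WHEEL_SPEED_MPS = 1.0   # wheel speed that corresponds to full PWM duty
PWM_MAX = 255               # e.g. an 8-bit PWM output


def cmd_vel_to_wheel_speeds(linear_x: float, angular_z: float) -> Tuple[float, float]:
    """Differential-drive inverse kinematics: body twist -> (left, right) wheel speeds in m/s."""
    half_track = WHEEL_SEPARATION_M / 2.0
    return linear_x - angular_z * half_track, linear_x + angular_z * half_track


def speed_to_pwm(speed: float) -> int:
    """Map a signed wheel speed to a signed PWM value clamped to [-PWM_MAX, PWM_MAX]."""
    duty = max(-1.0, min(1.0, speed / MAX_WHEEL_SPEED_MPS))
    return int(round(duty * PWM_MAX))


def cmd_vel_to_pwm(linear_x: float, angular_z: float) -> Tuple[int, int]:
    left, right = cmd_vel_to_wheel_speeds(linear_x, angular_z)
    # If either wheel would saturate, scale both down together so the
    # commanded turning radius is preserved.
    peak = max(abs(left), abs(right))
    if peak > MAX_WHEEL_SPEED_MPS:
        scale = MAX_WHEEL_SPEED_MPS / peak
        left, right = left * scale, right * scale
    return speed_to_pwm(left), speed_to_pwm(right)


print(cmd_vel_to_pwm(1.0, 5.0))  # (0, 255): saturated, but still pivots on the left wheel
```

Scaling both wheels together means the robot follows the commanded arc at a lower speed instead of turning with the wrong radius. The sign of each value would typically select the direction on an H-bridge motor driver.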

Issues With Odometry

Obtaining odometry from the laser scans proved problematic. Unfortunately, the hallways in CSH are not very feature-rich. This, coupled with the Lidar's low point resolution, left very little usable odometry data. Navigation requires some form of odometry, so this is a blocking issue. The solution would be to install encoders on the drivetrain. Because the semester is starting soon and this step is complex, this portion of the project is on hiatus until further notice.
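With encoders installed, wheel odometry is typically computed by dead reckoning. The tick deltas from each wheel give the distance that wheel travelled, their average gives forward motion, and their difference divided by the wheel separation gives the change in heading. This is a minimal sketch that assumes hypothetical encoder resolution and wheel dimensions.

```python
import math
from dataclasses import dataclass

# Hypothetical drivetrain parameters; measure the real robot before use.
TICKS_PER_REV = 360
WHEEL_RADIUS_M = 0.05
WHEEL_SEPARATION_M = 0.40


@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0


def ticks_to_distance(ticks: int) -> float:
    """Distance rolled by a wheel for a given number of encoder ticks."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * ticks / TICKS_PER_REV


def update_pose(pose: Pose2D, d_left_ticks: int, d_right_ticks: int) -> Pose2D:
    """Dead-reckon a new pose from encoder tick deltas since the last update."""
    d_left = ticks_to_distance(d_left_ticks)
    d_right = ticks_to_distance(d_right_ticks)
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / WHEEL_SEPARATION_M
    # Midpoint integration: advance along the average heading over the step.
    mid_theta = pose.theta + d_theta / 2.0
    x = pose.x + d_center * math.cos(mid_theta)
    y = pose.y + d_center * math.sin(mid_theta)
    # Keep the heading wrapped to (-pi, pi].
    new_theta = pose.theta + d_theta
    theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
    return Pose2D(x, y, theta)


pose = update_pose(Pose2D(), 360, 360)
print(round(pose.x, 4))  # 0.3142 (one wheel revolution straight ahead)
```

In a ROS node, this pose would typically be published as nav_msgs/Odometry along with the odom to base_link transform. Wheel odometry drifts over time, but it gives the navigation stack a motion estimate that does not depend on the hallway having distinctive features.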